Add .vscode

parent 04abf4ce4d

commit 2f946d6200

4509 changed files with 459724 additions and 0 deletions


7

.vscode/extensions/saviorisdead.RustyCode-0.18.0/.codeclimate.yml

vendored

Normal file


@@ -0,0 +1,7 @@
languages:
  TypeScript: true
exclude_paths:
- out/**/*
- .vscode/*
- images/*
- typings/**/*

9

.vscode/extensions/saviorisdead.RustyCode-0.18.0/.travis.yml

vendored

Normal file


@@ -0,0 +1,9 @@
language: node_js
node_js:
  - "5.0"

before_script:
  - npm install -g gulp

script:
  - gulp

32

.vscode/extensions/saviorisdead.RustyCode-0.18.0/.vsixmanifest

vendored

Normal file


@@ -0,0 +1,32 @@
<?xml version="1.0" encoding="utf-8"?>
<PackageManifest Version="2.0.0" xmlns="http://schemas.microsoft.com/developer/vsx-schema/2011" xmlns:d="http://schemas.microsoft.com/developer/vsx-schema-design/2011">
  <Metadata>
    <Identity Language="en-US" Id="RustyCode" Version="0.18.0" Publisher="saviorisdead"/>
    <DisplayName>Rusty Code</DisplayName>
    <Description xml:space="preserve">Rust language integration for VSCode</Description>
    <Tags>vscode</Tags>
    <Categories>Languages,Linters,Snippets</Categories>
    <GalleryFlags>Public</GalleryFlags>
    <Properties>

      <Property Id="Microsoft.VisualStudio.Services.Links.Source" Value="https://github.com/saviorisdead/RustyCode" />
      <Property Id="Microsoft.VisualStudio.Services.Links.Getstarted" Value="https://github.com/saviorisdead/RustyCode" />
      <Property Id="Microsoft.VisualStudio.Services.Links.Repository" Value="https://github.com/saviorisdead/RustyCode" />

      <Property Id="Microsoft.VisualStudio.Services.Links.Support" Value="https://github.com/saviorisdead/RustyCode/issues" />
      <Property Id="Microsoft.VisualStudio.Services.Links.Learn" Value="https://github.com/saviorisdead/RustyCode" />


    </Properties>

    <Icon>extension/images/icon.png</Icon>
  </Metadata>
  <Installation>
    <InstallationTarget Id="Microsoft.VisualStudio.Code"/>
  </Installation>
  <Dependencies/>
  <Assets>
    <Asset Type="Microsoft.VisualStudio.Code.Manifest" Path="extension/package.json" Addressable="true" />
    <Asset Type="Microsoft.VisualStudio.Services.Content.Details" Path="extension/README.md" Addressable="true" /><Asset Type="Microsoft.VisualStudio.Services.Icons.Default" Path="extension/images/icon.png" Addressable="true" />
  </Assets>
</PackageManifest>

119

.vscode/extensions/saviorisdead.RustyCode-0.18.0/CHANGELOG.md

vendored

Normal file


@@ -0,0 +1,119 @@
# Changelog

# 0.18.0
- Added support for separate check command for libraries (@vhbit)
- Added support for exit code 4 when using diff mode in recent (master) versions of rustfmt (@trixnz)

# 0.17.0
- Added support for `rustsym` version 0.3.0 which enables searching for macros (@trixnz)
- Added support for JSON error format and new (RUST_NEW_ERROR_FORMAT=true) error style (@trixnz)

# 0.16.1
- Fixed bug with filter for formatting (@trixnz)

# 0.16.0
- Snippets! (@trixnz)
- Fix for `rustsym` integration

# 0.15.1
- Fixed a bug with check on save not working if formatting failed (@trixnz)

# 0.15.0
- Prevent the formatter from running on non-rust code files (@mooman219)
- Support for `rustsym` (@trixnz)

# 0.14.7
- Fix for an issue where error code `3` returned by `rustfmt` broke the formatting integration (@trixnz)

# 0.14.6
- Fix for `rustfmt` integration (@nlordell)

# 0.14.5
- Small typo fix for settings description (@juanfra684)

# 0.14.4
- Fixes for `rustfmt` integration (@junjieliang)

# 0.14.3
- Small fixes for `cargoHomePath` setting (@saviorisdead)

# 0.14.2
- Fixed some issues with `rustfmt` (@Draivin)
- Change extension options format (@fulmicoton)

# 0.14.1
- Preserve focus when opening `racer error` channel (@KalitaAlexey)

# 0.14.0
- Stabilized `rustfmt` functionality (@Draivin)
- Bumped version of `vscode` engine (@Draivin)
- Added option to specify `CARGO_HOME` via settings (@saviorisdead)

## 0.13.1
- Improved visual style of hover tooltips (@Draivin)

## 0.13.0
- Now it's possible to check Rust code with `cargo build` (@JohanSJA)
- Moved indication for racer to status bar (@KalitaAlexey)

## 0.12.0
- Added ability to load and work on multiple crates in one workspace (@KalitaAlexey)
- Added ability to display doc-comments in hover popup (@Soaa)
- Added `help` and `note` modes to diagnostic detection (@swgillespie)
- Various bug fixes and small improvements (@KalitaAlexey, @Soaa)

## 0.11.0
- Added support for linting via `clippy` (@White-Oak)

## 0.10.0
- Added support for racer `tabbed text` mode.

## 0.9.1
- Fixed bug with missing commands (@KalitaAlexey)

## 0.9.0
- Removed unnecessary warnings (@KalitaAlexey)
- Added some default key-bindings (@KalitaAlaexey)

## 0.8.0
- Added linting on save support (@White-Oak)

## 0.7.1
- Fixed bug with incorrect signature help (@henriiik)

## 0.7.0
- Added support for multiline function call signature help (@henriiik)

## 0.6.0
- Added cargo commands for examples (@KalitaAlexey)

## 0.5.5
- Fixed issue with racer crashing on parentheses (@saviorisdead)

## 0.5.4
- Show errors after failed `cargo build` (@henriiik)

## 0.5.0
- Added `cargo terminate` command (@Draivin)

## 0.4.4
- Added standard messages for missing executables (@Draivin)

## 0.4.3
- Added `cargoPath` option to extension options (@saviorisdead)

## 0.4.2
- Clear diagnostic collection on cargo run (@saviorisdead)

## 0.4.1
- Spelling corrected (@skade, @CryZe, @crumblingstatue)
- Added `cargo check` command and diagnostic handling to editor (@Draivin)
- Added option to view full racer error and restart error automatically (@Draivin)

## 0.4.0
- Various fixes of rustfmt integration (@saviorisdead, @KalitaAlexey, @Draivin)
- Cargo commands integration (@saviorisdead)
- Tests for formatting (@Draivin)

## 0.3.3
- Fixed bug with formatting using 'rustfmt' (@Draivin)

22

.vscode/extensions/saviorisdead.RustyCode-0.18.0/LICENSE

vendored

Normal file


@@ -0,0 +1,22 @@
The MIT License (MIT)

Copyright (c) 2015 Constantine Akhantyev

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

80

.vscode/extensions/saviorisdead.RustyCode-0.18.0/README.md

vendored

Normal file


|

|

@ -0,0 +1,80 @@

|

|||

[](https://travis-ci.org/saviorisdead/RustyCode)

|

||||

|

||||

# Rust for Visual Studio Code (Latest: 0.18.0)

|

||||

|

||||

[Changelog](https://github.com/saviorisdead/RustyCode/blob/master/CHANGELOG.md)

|

||||

|

||||

[Roadmap](https://github.com/saviorisdead/RustyCode/blob/master/ROADMAP.md)

|

||||

|

||||

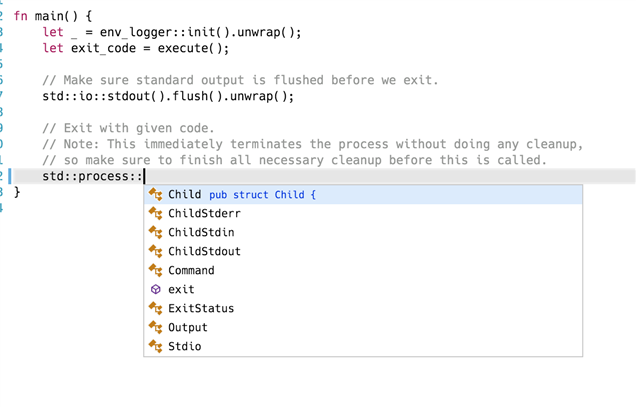

This extension adds advanced language support for the Rust language to VS Code, including:

|

||||

|

||||

- Autocompletion (using `racer`)

|

||||

- Go To Definition (using `racer`)

|

||||

- Go To Symbol (using `rustsym`)

|

||||

- Format (using `rustfmt`)

|

||||

- Linter *checkOnSave is experimental*

|

||||

- Linting can be done via *checkWith is experimental*

|

||||

- `check`. This is the default. Runs rust compiler but skips codegen pass.

|

||||

- `check-lib`. As above, but is limited only to library if project has library + multiple binaries

|

||||

- `clippy` if `cargo-clippy` is installed

|

||||

- `build`

|

||||

- Cargo tasks (Open Command Pallete and they will be there)

|

||||

- Snippets

|

||||

|

||||

|

||||

### IDE Features

|

||||

|

||||

|

||||

## Using

|

||||

|

||||

First, you will need to install Visual Studio Code `1.0` or newer. In the command pallete (`cmd-shift-p`) select `Install Extension` and choose `RustyCode`.

|

||||

|

||||

Then, you need to install Racer (instructions and source code [here](https://github.com/phildawes/racer)). Please, note that we only support latest versions of `Racer`.

|

||||

|

||||

Also, you need to install Rustfmt (instructions and source code [here](https://github.com/rust-lang-nursery/rustfmt))

|

||||

|

||||

And last step is downloading Rust language source files from [here](https://github.com/rust-lang/rust).

|

||||

|

||||

### Options

|

||||

|

||||

The following Visual Studio Code settings are available for the RustyCode extension. These can be set in user preferences or workspace settings (`.vscode/settings.json`)

|

||||

|

||||

```json

|

||||

{

|

||||

"rust.racerPath": null, // Specifies path to Racer binary if it's not in PATH

|

||||

"rust.rustLangSrcPath": null, // Specifies path to /src directory of local copy of Rust sources

|

||||

"rust.rustfmtPath": null, // Specifies path to Rustfmt binary if it's not in PATH

|

||||

"rust.rustsymPath": null, // Specifies path to Rustsym binary if it's not in PATH

|

||||

"rust.cargoPath": null, // Specifies path to Cargo binary if it's not in PATH

|

||||

"rust.cargoHomePath": null, // Path to Cargo home directory, mostly needed for racer. Needed only if using custom rust installation.

|

||||

"rust.formatOnSave": false, // Turn on/off autoformatting file on save (EXPERIMENTAL)

|

||||

"rust.checkOnSave": false, // Turn on/off `cargo check` project on save (EXPERIMENTAL)

|

||||

"rust.checkWith": "build", // Specifies the linter to use. (EXPERIMENTAL)

|

||||

"rust.useJsonErrors": false, // Enable the use of JSON errors (requires Rust 1.7+). Note: This is an unstable feature of Rust and is still in the process of being stablised

|

||||

"rust.useNewErrorFormat": false, // "Use the new Rust error format (RUST_NEW_ERROR_FORMAT=true). Note: This flag is mutually exclusive with `useJsonErrors`.

|

||||

}

|

||||

```
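
As a rough illustration of the note above that `useNewErrorFormat` and `useJsonErrors` are mutually exclusive, the sketch below checks a plain settings object for that conflict and for an unrecognised `rust.checkWith` value. The helper name `findSettingsProblems` is hypothetical and is not part of RustyCode; the list of accepted `checkWith` values is an assumption taken from the feature list in this README.

```javascript
// Hypothetical helper (not part of RustyCode): reports problems in a
// settings object shaped like the JSON example above.
function findSettingsProblems(settings) {
  var problems = [];

  // The README states these two flags are mutually exclusive.
  if (settings['rust.useJsonErrors'] && settings['rust.useNewErrorFormat']) {
    problems.push('rust.useJsonErrors and rust.useNewErrorFormat are mutually exclusive');
  }

  // Assumed set of linters, copied from the README feature list.
  var allowed = ['check', 'check-lib', 'clippy', 'build'];
  var checkWith = settings['rust.checkWith'];
  if (checkWith !== undefined && allowed.indexOf(checkWith) === -1) {
    problems.push('rust.checkWith must be one of: ' + allowed.join(', '));
  }

  return problems;
}
```

Calling `findSettingsProblems` with the parsed contents of `.vscode/settings.json` returns an array of messages, which is empty when neither problem is found.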


## Building and Debugging the Extension

[Repository](https://github.com/saviorisdead/RustyCode)

You can set up a development environment for debugging the extension during extension development.

First make sure you do not have the extension installed in `~/.vscode/extensions`. Then clone the repo somewhere else on your machine, run `npm install` and open a development instance of Code.

```bash
rm -rf ~/.vscode/extensions/RustyCode
cd ~
git clone https://github.com/saviorisdead/RustyCode
cd RustyCode
npm install
npm run-script compile
code .
```

You can now go to the Debug viewlet and select `Launch Extension` then hit run (`F5`).
If you make edits in the extension `.ts` files, just reload (`cmd-r`) the `[Extension Development Host]` instance of Code to load in the new extension code. The debugging instance will automatically reattach.

## License
[MIT](https://github.com/saviorisdead/RustyCode/blob/master/LICENSE)

4

.vscode/extensions/saviorisdead.RustyCode-0.18.0/ROADMAP.md

vendored

Normal file


@@ -0,0 +1,4 @@
## Roadmap:
- Debugging

Pull requests with suggestions and implementations are welcome.

20

.vscode/extensions/saviorisdead.RustyCode-0.18.0/gulpfile.js

vendored

Normal file


@@ -0,0 +1,20 @@
var gulp = require('gulp');
var tslint = require('gulp-tslint');
var shell = require('gulp-shell');

var files = {
    src: 'src/**/*.ts',
    test: 'test/**/*.ts'
};

gulp.task('compile', shell.task([
    'node ./node_modules/vscode/bin/compile -p ./'
]));

gulp.task('tslint', function() {
    return gulp.src([files.src, files.test, '!test/index.ts'])
        .pipe(tslint())
        .pipe(tslint.report('verbose'));
});

gulp.task('default', ['compile', 'tslint']);

BIN

.vscode/extensions/saviorisdead.RustyCode-0.18.0/images/icon.png

vendored

Normal file


Binary file not shown. Size: 5.6 KiB

BIN

.vscode/extensions/saviorisdead.RustyCode-0.18.0/images/ide_features.png

vendored

Normal file


Binary file not shown. Size: 49 KiB

499

.vscode/extensions/saviorisdead.RustyCode-0.18.0/node_modules/.bin/_mocha

generated

vendored

Executable file


@@ -0,0 +1,499 @@
#!/usr/bin/env node

/**
 * Module dependencies.
 */

var program = require('commander'),
    path = require('path'),
    fs = require('fs'),
    resolve = path.resolve,
    exists = fs.existsSync || path.existsSync,
    Mocha = require('../'),
    utils = Mocha.utils,
    join = path.join,
    cwd = process.cwd(),
    getOptions = require('./options'),
    mocha = new Mocha;

/**
 * Save timer references to avoid Sinon interfering (see GH-237).
 */

var Date = global.Date
  , setTimeout = global.setTimeout
  , setInterval = global.setInterval
  , clearTimeout = global.clearTimeout
  , clearInterval = global.clearInterval;

/**
 * Files.
 */

var files = [];

/**
 * Globals.
 */

var globals = [];

/**
 * Requires.
 */

var requires = [];

/**
 * Images.
 */

var images = {
    fail: __dirname + '/../images/error.png'
  , pass: __dirname + '/../images/ok.png'
};

// options

program
  .version(JSON.parse(fs.readFileSync(__dirname + '/../package.json', 'utf8')).version)
  .usage('[debug] [options] [files]')
  .option('-A, --async-only', "force all tests to take a callback (async) or return a promise")
  .option('-c, --colors', 'force enabling of colors')
  .option('-C, --no-colors', 'force disabling of colors')
  .option('-G, --growl', 'enable growl notification support')
  .option('-O, --reporter-options <k=v,k2=v2,...>', 'reporter-specific options')
  .option('-R, --reporter <name>', 'specify the reporter to use', 'spec')
  .option('-S, --sort', "sort test files")
  .option('-b, --bail', "bail after first test failure")
  .option('-d, --debug', "enable node's debugger, synonym for node --debug")
  .option('-g, --grep <pattern>', 'only run tests matching <pattern>')
  .option('-f, --fgrep <string>', 'only run tests containing <string>')
  .option('-gc, --expose-gc', 'expose gc extension')
  .option('-i, --invert', 'inverts --grep and --fgrep matches')
  .option('-r, --require <name>', 'require the given module')
  .option('-s, --slow <ms>', '"slow" test threshold in milliseconds [75]')
  .option('-t, --timeout <ms>', 'set test-case timeout in milliseconds [2000]')
  .option('-u, --ui <name>', 'specify user-interface (bdd|tdd|exports)', 'bdd')
  .option('-w, --watch', 'watch files for changes')
  .option('--check-leaks', 'check for global variable leaks')
  .option('--full-trace', 'display the full stack trace')
  .option('--compilers <ext>:<module>,...', 'use the given module(s) to compile files', list, [])
  .option('--debug-brk', "enable node's debugger breaking on the first line")
  .option('--globals <names>', 'allow the given comma-delimited global [names]', list, [])
  .option('--es_staging', 'enable all staged features')
  .option('--harmony<_classes,_generators,...>', 'all node --harmony* flags are available')
  .option('--inline-diffs', 'display actual/expected differences inline within each string')
  .option('--interfaces', 'display available interfaces')
  .option('--no-deprecation', 'silence deprecation warnings')
  .option('--no-exit', 'require a clean shutdown of the event loop: mocha will not call process.exit')
  .option('--no-timeouts', 'disables timeouts, given implicitly with --debug')
  .option('--opts <path>', 'specify opts path', 'test/mocha.opts')
  .option('--perf-basic-prof', 'enable perf linux profiler (basic support)')
  .option('--prof', 'log statistical profiling information')
  .option('--log-timer-events', 'Time events including external callbacks')
  .option('--recursive', 'include sub directories')
  .option('--reporters', 'display available reporters')
  .option('--retries <times>', 'set numbers of time to retry a failed test case')
  .option('--throw-deprecation', 'throw an exception anytime a deprecated function is used')
  .option('--trace', 'trace function calls')
  .option('--trace-deprecation', 'show stack traces on deprecations')
  .option('--use_strict', 'enforce strict mode')
  .option('--watch-extensions <ext>,...', 'additional extensions to monitor with --watch', list, [])
  .option('--delay', 'wait for async suite definition')

program.name = 'mocha';

// init command

program
  .command('init <path>')
  .description('initialize a client-side mocha setup at <path>')
  .action(function(path){
    var mkdir = require('mkdirp');
    mkdir.sync(path);
    var css = fs.readFileSync(join(__dirname, '..', 'mocha.css'));
    var js = fs.readFileSync(join(__dirname, '..', 'mocha.js'));
    var tmpl = fs.readFileSync(join(__dirname, '..', 'lib/template.html'));
    fs.writeFileSync(join(path, 'mocha.css'), css);
    fs.writeFileSync(join(path, 'mocha.js'), js);
    fs.writeFileSync(join(path, 'tests.js'), '');
    fs.writeFileSync(join(path, 'index.html'), tmpl);
    process.exit(0);
  });

// --globals

program.on('globals', function(val){
  globals = globals.concat(list(val));
});

// --reporters

program.on('reporters', function(){
  console.log();
  console.log(' dot - dot matrix');
  console.log(' doc - html documentation');
  console.log(' spec - hierarchical spec list');
  console.log(' json - single json object');
  console.log(' progress - progress bar');
  console.log(' list - spec-style listing');
  console.log(' tap - test-anything-protocol');
  console.log(' landing - unicode landing strip');
  console.log(' xunit - xunit reporter');
  console.log(' html-cov - HTML test coverage');
  console.log(' json-cov - JSON test coverage');
  console.log(' min - minimal reporter (great with --watch)');
  console.log(' json-stream - newline delimited json events');
  console.log(' markdown - markdown documentation (github flavour)');
  console.log(' nyan - nyan cat!');
  console.log();
  process.exit();
});

// --interfaces

program.on('interfaces', function(){
  console.log('');
  console.log(' bdd');
  console.log(' tdd');
  console.log(' qunit');
  console.log(' exports');
  console.log('');
  process.exit();
});

// -r, --require

module.paths.push(cwd, join(cwd, 'node_modules'));

program.on('require', function(mod){
  var abs = exists(mod) || exists(mod + '.js');
  if (abs) mod = resolve(mod);
  requires.push(mod);
});

// If not already done, load mocha.opts
if (!process.env.LOADED_MOCHA_OPTS) {
  getOptions();
}

// parse args

program.parse(process.argv);

// infinite stack traces

Error.stackTraceLimit = Infinity; // TODO: config

// reporter options

var reporterOptions = {};
if (program.reporterOptions !== undefined) {
  program.reporterOptions.split(",").forEach(function(opt) {
    var L = opt.split("=");
    if (L.length > 2 || L.length === 0) {
      throw new Error("invalid reporter option '" + opt + "'");
    } else if (L.length === 2) {
      reporterOptions[L[0]] = L[1];
    } else {
      reporterOptions[L[0]] = true;
    }
  });
}

// reporter

mocha.reporter(program.reporter, reporterOptions);

// load reporter

var Reporter = null;
try {
  Reporter = require('../lib/reporters/' + program.reporter);
} catch (err) {
  try {
    Reporter = require(program.reporter);
  } catch (err) {
    throw new Error('reporter "' + program.reporter + '" does not exist');
  }
}

// --no-colors

if (!program.colors) mocha.useColors(false);

// --colors

if (~process.argv.indexOf('--colors') ||
    ~process.argv.indexOf('-c')) {
  mocha.useColors(true);
}

// --inline-diffs

if (program.inlineDiffs) mocha.useInlineDiffs(true);

// --slow <ms>

if (program.slow) mocha.suite.slow(program.slow);

// --no-timeouts

if (!program.timeouts) mocha.enableTimeouts(false);

// --timeout

if (program.timeout) mocha.suite.timeout(program.timeout);

// --bail

mocha.suite.bail(program.bail);

// --grep

if (program.grep) mocha.grep(new RegExp(program.grep));

// --fgrep

if (program.fgrep) mocha.grep(program.fgrep);

// --invert

if (program.invert) mocha.invert();

// --check-leaks

if (program.checkLeaks) mocha.checkLeaks();

// --stack-trace

if(program.fullTrace) mocha.fullTrace();

// --growl

if (program.growl) mocha.growl();

// --async-only

if (program.asyncOnly) mocha.asyncOnly();

// --delay

if (program.delay) mocha.delay();

// --globals

mocha.globals(globals);

// --retries

if (program.retries) mocha.suite.retries(program.retries);

// custom compiler support

var extensions = ['js'];
program.compilers.forEach(function(c) {
  var compiler = c.split(':')
    , ext = compiler[0]
    , mod = compiler[1];

  if (mod[0] == '.') mod = join(process.cwd(), mod);
  require(mod);
  extensions.push(ext);
  program.watchExtensions.push(ext);
});

// requires

requires.forEach(function(mod) {
  require(mod);
});

// interface

mocha.ui(program.ui);

//args

var args = program.args;

// default files to test/*.{js,coffee}

if (!args.length) args.push('test');

args.forEach(function(arg){
  files = files.concat(utils.lookupFiles(arg, extensions, program.recursive));
});

// resolve

files = files.map(function(path){
  return resolve(path);
});

if (program.sort) {
  files.sort();
}

// --watch

var runner;
if (program.watch) {
  console.log();
  hideCursor();
  process.on('SIGINT', function(){
    showCursor();
    console.log('\n');
    process.exit();
  });


  var watchFiles = utils.files(cwd, [ 'js' ].concat(program.watchExtensions));
  var runAgain = false;

  function loadAndRun() {
    try {
      mocha.files = files;
      runAgain = false;
      runner = mocha.run(function(){
        runner = null;
        if (runAgain) {
          rerun();
        }
      });
    } catch(e) {
      console.log(e.stack);
    }
  }

  function purge() {
    watchFiles.forEach(function(file){
      delete require.cache[file];
    });
  }

  loadAndRun();

  function rerun() {
    purge();
    stop()
    if (!program.grep)
      mocha.grep(null);
    mocha.suite = mocha.suite.clone();
    mocha.suite.ctx = new Mocha.Context;
    mocha.ui(program.ui);
    loadAndRun();
  }

  utils.watch(watchFiles, function(){
    runAgain = true;
    if (runner) {
      runner.abort();
    } else {
      rerun();
    }
  });

} else {

  // load

  mocha.files = files;
  runner = mocha.run(program.exit ? exit : exitLater);

}

function exitLater(code) {
  process.on('exit', function() { process.exit(code) })
}

function exit(code) {
  // flush output for Node.js Windows pipe bug
  // https://github.com/joyent/node/issues/6247 is just one bug example
  // https://github.com/visionmedia/mocha/issues/333 has a good discussion
  function done() {
    if (!(draining--)) process.exit(code);
  }

  var draining = 0;
  var streams = [process.stdout, process.stderr];

  streams.forEach(function(stream){
    // submit empty write request and wait for completion
    draining += 1;
    stream.write('', done);
  });

  done();
}

process.on('SIGINT', function() { runner.abort(); })

// enable growl notifications

function growl(runner, reporter) {
  var notify = require('growl');

  runner.on('end', function(){
    var stats = reporter.stats;
    if (stats.failures) {
      var msg = stats.failures + ' of ' + runner.total + ' tests failed';
      notify(msg, { name: 'mocha', title: 'Failed', image: images.fail });
    } else {
      notify(stats.passes + ' tests passed in ' + stats.duration + 'ms', {
          name: 'mocha'
        , title: 'Passed'
        , image: images.pass
      });
    }
  });
}

/**
 * Parse list.
 */

function list(str) {
  return str.split(/ *, */);
}

/**
 * Hide the cursor.
 */

function hideCursor(){
  process.stdout.write('\u001b[?25l');
}

/**
 * Show the cursor.
 */

function showCursor(){
  process.stdout.write('\u001b[?25h');
}

/**
 * Stop play()ing.
 */

function stop() {
  process.stdout.write('\u001b[2K');
  clearInterval(play.timer);
}

/**
 * Play the given array of strings.
 */

function play(arr, interval) {
  var len = arr.length
    , interval = interval || 100
    , i = 0;

  play.timer = setInterval(function(){
    var str = arr[i++ % len];
    process.stdout.write('\u001b[0G' + str);
  }, interval);
}

75

.vscode/extensions/saviorisdead.RustyCode-0.18.0/node_modules/.bin/dateformat

generated

vendored

Executable file

|

|

@@ -0,0 +1,75 @@

|

|||

#!/usr/bin/env node

|

||||

/**

|

||||

* dateformat <https://github.com/felixge/node-dateformat>

|

||||

*

|

||||

* Copyright (c) 2014 Charlike Mike Reagent (cli), contributors.

|

||||

* Released under the MIT license.

|

||||

*/

|

||||

|

||||

'use strict';

|

||||

|

||||

/**

|

||||

* Module dependencies.

|

||||

*/

|

||||

|

||||

var dateFormat = require('../lib/dateformat');

|

||||

var meow = require('meow');

|

||||

var stdin = require('get-stdin');

|

||||

|

||||

var cli = meow({

|

||||

pkg: '../package.json',

|

||||

help: [

|

||||

'Options',

|

||||

' --help Show this help',

|

||||

' --version Current version of package',

|

||||

' -d | --date Date that want to format (Date object as Number or String)',

|

||||

' -m | --mask Mask that will use to format the date',

|

||||

' -u | --utc Convert local time to UTC time or use `UTC:` prefix in mask',

|

||||

' -g | --gmt You can use `GMT:` prefix in mask',

|

||||

'',

|

||||

'Usage',

|

||||

' dateformat [date] [mask]',

|

||||

' dateformat "Nov 26 2014" "fullDate"',

|

||||

' dateformat 1416985417095 "dddd, mmmm dS, yyyy, h:MM:ss TT"',

|

||||

' dateformat 1315361943159 "W"',

|

||||

' dateformat "UTC:h:MM:ss TT Z"',

|

||||

' dateformat "longTime" true',

|

||||

' dateformat "longTime" false true',

|

||||

' dateformat "Jun 9 2007" "fullDate" true',

|

||||

' date +%s | dateformat',

|

||||

''

|

||||

].join('\n')

|

||||

})

|

||||

|

||||

var date = cli.input[0] || cli.flags.d || cli.flags.date || Date.now();

|

||||

var mask = cli.input[1] || cli.flags.m || cli.flags.mask || dateFormat.masks.default;

|

||||

var utc = cli.input[2] || cli.flags.u || cli.flags.utc || false;

|

||||

var gmt = cli.input[3] || cli.flags.g || cli.flags.gmt || false;

|

||||

|

||||

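utc = utc === true || utc === 'true'; // accept a bare -u flag (boolean) as well as the string 'true'

|

||||

gmt = gmt === true || gmt === 'true'; // accept a bare -g flag (boolean) as well as the string 'true'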

|

||||

|

||||

if (!cli.input.length) {

|

||||

stdin(function(date) {

|

||||

console.log(dateFormat(date, dateFormat.masks.default, utc, gmt));

|

||||

});

|

||||

return;

|

||||

}

|

||||

|

||||

if (cli.input.length === 1 && date) {

|

||||

mask = date;

|

||||

date = Date.now();

|

||||

console.log(dateFormat(date, mask, utc, gmt));

|

||||

return;

|

||||

}

|

||||

|

||||

if (cli.input.length >= 2 && date && mask) {

|

||||

if (mask === 'true' || mask === 'false') {

|

||||

utc = mask === 'true' ? true : false;

|

||||

gmt = !utc;

|

||||

mask = date;

|

||||

date = Date.now();

|

||||

}

|

||||

console.log(dateFormat(date, mask, utc, gmt));

|

||||

return;

|

||||

}

|

||||

212

.vscode/extensions/saviorisdead.RustyCode-0.18.0/node_modules/.bin/gulp

generated

vendored

Executable file

|

|

@@ -0,0 +1,212 @@

|

|||

#!/usr/bin/env node

|

||||

|

||||

'use strict';

|

||||

var gutil = require('gulp-util');

|

||||

var prettyTime = require('pretty-hrtime');

|

||||

var chalk = require('chalk');

|

||||

var semver = require('semver');

|

||||

var archy = require('archy');

|

||||

var Liftoff = require('liftoff');

|

||||

var tildify = require('tildify');

|

||||

var interpret = require('interpret');

|

||||

var v8flags = require('v8flags');

|

||||

var completion = require('../lib/completion');

|

||||

var argv = require('minimist')(process.argv.slice(2));

|

||||

var taskTree = require('../lib/taskTree');

|

||||

|

||||

// Set env var for ORIGINAL cwd

|

||||

// before anything touches it

|

||||

process.env.INIT_CWD = process.cwd();

|

||||

|

||||

var cli = new Liftoff({

|

||||

name: 'gulp',

|

||||

completions: completion,

|

||||

extensions: interpret.jsVariants,

|

||||

v8flags: v8flags,

|

||||

});

|

||||

|

||||

// Exit with 0 or 1

|

||||

var failed = false;

|

||||

process.once('exit', function(code) {

|

||||

if (code === 0 && failed) {

|

||||

process.exit(1);

|

||||

}

|

||||

});

|

||||

|

||||

// Parse those args m8

|

||||

var cliPackage = require('../package');

|

||||

var versionFlag = argv.v || argv.version;

|

||||

var tasksFlag = argv.T || argv.tasks;

|

||||

var tasks = argv._;

|

||||

var toRun = tasks.length ? tasks : ['default'];

|

||||

|

||||

// This is a hold-over until we have a better logging system

|

||||

// with log levels

|

||||

var simpleTasksFlag = argv['tasks-simple'];

|

||||

var shouldLog = !argv.silent && !simpleTasksFlag;

|

||||

|

||||

if (!shouldLog) {

|

||||

gutil.log = function() {};

|

||||

}

|

||||

|

||||

cli.on('require', function(name) {

|

||||

gutil.log('Requiring external module', chalk.magenta(name));

|

||||

});

|

||||

|

||||

cli.on('requireFail', function(name) {

|

||||

gutil.log(chalk.red('Failed to load external module'), chalk.magenta(name));

|

||||

});

|

||||

|

||||

cli.on('respawn', function(flags, child) {

|

||||

var nodeFlags = chalk.magenta(flags.join(', '));

|

||||

var pid = chalk.magenta(child.pid);

|

||||

gutil.log('Node flags detected:', nodeFlags);

|

||||

gutil.log('Respawned to PID:', pid);

|

||||

});

|

||||

|

||||

cli.launch({

|

||||

cwd: argv.cwd,

|

||||

configPath: argv.gulpfile,

|

||||

require: argv.require,

|

||||

completion: argv.completion,

|

||||

}, handleArguments);

|

||||

|

||||

// The actual logic

|

||||

function handleArguments(env) {

|

||||

if (versionFlag && tasks.length === 0) {

|

||||

gutil.log('CLI version', cliPackage.version);

|

||||

if (env.modulePackage && typeof env.modulePackage.version !== 'undefined') {

|

||||

gutil.log('Local version', env.modulePackage.version);

|

||||

}

|

||||

process.exit(0);

|

||||

}

|

||||

|

||||

if (!env.modulePath) {

|

||||

gutil.log(

|

||||

chalk.red('Local gulp not found in'),

|

||||

chalk.magenta(tildify(env.cwd))

|

||||

);

|

||||

gutil.log(chalk.red('Try running: npm install gulp'));

|

||||

process.exit(1);

|

||||

}

|

||||

|

||||

if (!env.configPath) {

|

||||

gutil.log(chalk.red('No gulpfile found'));

|

||||

process.exit(1);

|

||||

}

|

||||

|

||||

// Check for semver difference between cli and local installation

|

||||

if (semver.gt(cliPackage.version, env.modulePackage.version)) {

|

||||

gutil.log(chalk.red('Warning: gulp version mismatch:'));

|

||||

gutil.log(chalk.red('Global gulp is', cliPackage.version));

|

||||

gutil.log(chalk.red('Local gulp is', env.modulePackage.version));

|

||||

}

|

||||

|

||||

// Chdir before requiring gulpfile to make sure

|

||||

// we let them chdir as needed

|

||||

if (process.cwd() !== env.cwd) {

|

||||

process.chdir(env.cwd);

|

||||

gutil.log(

|

||||

'Working directory changed to',

|

||||

chalk.magenta(tildify(env.cwd))

|

||||

);

|

||||

}

|

||||

|

||||

// This is what actually loads up the gulpfile

|

||||

require(env.configPath);

|

||||

gutil.log('Using gulpfile', chalk.magenta(tildify(env.configPath)));

|

||||

|

||||

var gulpInst = require(env.modulePath);

|

||||

logEvents(gulpInst);

|

||||

|

||||

process.nextTick(function() {

|

||||

if (simpleTasksFlag) {

|

||||

return logTasksSimple(env, gulpInst);

|

||||

}

|

||||

if (tasksFlag) {

|

||||

return logTasks(env, gulpInst);

|

||||

}

|

||||

gulpInst.start.apply(gulpInst, toRun);

|

||||

});

|

||||

}

|

||||

|

||||

function logTasks(env, localGulp) {

|

||||

var tree = taskTree(localGulp.tasks);

|

||||

tree.label = 'Tasks for ' + chalk.magenta(tildify(env.configPath));

|

||||

archy(tree)

|

||||

.split('\n')

|

||||

.forEach(function(v) {

|

||||

if (v.trim().length === 0) {

|

||||

return;

|

||||

}

|

||||

gutil.log(v);

|

||||

});

|

||||

}

|

||||

|

||||

function logTasksSimple(env, localGulp) {

|

||||

console.log(Object.keys(localGulp.tasks)

|

||||

.join('\n')

|

||||

.trim());

|

||||

}

|

||||

|

||||

// Format orchestrator errors

|

||||

function formatError(e) {

|

||||

if (!e.err) {

|

||||

return e.message;

|

||||

}

|

||||

|

||||

// PluginError

|

||||

if (typeof e.err.showStack === 'boolean') {

|

||||

return e.err.toString();

|

||||

}

|

||||

|

||||

// Normal error

|

||||

if (e.err.stack) {

|

||||

return e.err.stack;

|

||||

}

|

||||

|

||||

// Unknown (string, number, etc.)

|

||||

return new Error(String(e.err)).stack;

|

||||

}

|

||||

|

||||

// Wire up logging events

|

||||

function logEvents(gulpInst) {

|

||||

|

||||

// Total hack due to poor error management in orchestrator

|

||||

gulpInst.on('err', function() {

|

||||

failed = true;

|

||||

});

|

||||

|

||||

gulpInst.on('task_start', function(e) {

|

||||

// TODO: batch these

|

||||

// so when 5 tasks start at once it only logs one time with all 5

|

||||

gutil.log('Starting', '\'' + chalk.cyan(e.task) + '\'...');

|

||||

});

|

||||

|

||||

gulpInst.on('task_stop', function(e) {

|

||||

var time = prettyTime(e.hrDuration);

|

||||

gutil.log(

|

||||

'Finished', '\'' + chalk.cyan(e.task) + '\'',

|

||||

'after', chalk.magenta(time)

|

||||

);

|

||||

});

|

||||

|

||||

gulpInst.on('task_err', function(e) {

|

||||

var msg = formatError(e);

|

||||

var time = prettyTime(e.hrDuration);

|

||||

gutil.log(

|

||||

'\'' + chalk.cyan(e.task) + '\'',

|

||||

chalk.red('errored after'),

|

||||

chalk.magenta(time)

|

||||

);

|

||||

gutil.log(msg);

|

||||

});

|

||||

|

||||

gulpInst.on('task_not_found', function(err) {

|

||||

gutil.log(

|

||||

chalk.red('Task \'' + err.task + '\' is not in your gulpfile')

|

||||

);

|

||||

gutil.log('Please check the documentation for proper gulpfile formatting');

|

||||

process.exit(1);

|

||||

});

|

||||

}

|

||||

45

.vscode/extensions/saviorisdead.RustyCode-0.18.0/node_modules/.bin/har-validator

generated

vendored

Executable file

|

|

@@ -0,0 +1,45 @@

|

|||

#!/usr/bin/env node

|

||||

|

||||

'use strict'

|

||||

|

||||

var Promise = require('bluebird')

|

||||

|

||||

var chalk = require('chalk')

|

||||

var cmd = require('commander')

|

||||

var fs = Promise.promisifyAll(require('fs'))

|

||||

var path = require('path')

|

||||

var pkg = require('../package.json')

|

||||

var validate = Promise.promisifyAll(require('..'))

|

||||

|

||||

cmd

|

||||

.version(pkg.version)

|

||||

.usage('[options] <files ...>')

|

||||

.option('-s, --schema [name]', 'validate schema name (log, request, response, etc ...)')

|

||||

.parse(process.argv)

|

||||

|

||||

if (!cmd.args.length) {

|

||||

cmd.help()

|

||||

}

|

||||

|

||||

if (!cmd.schema) {

|

||||

cmd.schema = 'har'

|

||||

}

|

||||

|

||||

cmd.args.map(function (fileName) {

|

||||

var file = chalk.yellow.italic(path.basename(fileName))

|

||||

|

||||

fs.readFileAsync(fileName)

|

||||

.then(JSON.parse)

|

||||

.then(validate[cmd.schema + 'Async'])

|

||||

.then(function () {

|

||||

console.log('%s [%s] is valid', chalk.green('✓'), file)

|

||||

})

|

||||

.catch(SyntaxError, function (e) {

|

||||

console.error('%s [%s] failed to read JSON: %s', chalk.red('✖'), file, chalk.red(e.message))

|

||||

})

|

||||

.catch(function (e) {

|

||||

e.errors.map(function (err) {

|

||||

console.error('%s [%s] failed validation: (%s: %s) %s', chalk.red('✖'), file, chalk.cyan.italic(err.field), chalk.magenta.italic(err.value), chalk.red(err.message))

|

||||

})

|

||||

})

|

||||

})

|

||||

147

.vscode/extensions/saviorisdead.RustyCode-0.18.0/node_modules/.bin/jade

generated

vendored

Executable file

|

|

@@ -0,0 +1,147 @@

|

|||

#!/usr/bin/env node

|

||||

|

||||

/**

|

||||

* Module dependencies.

|

||||

*/

|

||||

|

||||

var fs = require('fs')

|

||||

, program = require('commander')

|

||||

, path = require('path')

|

||||

, basename = path.basename

|

||||

, dirname = path.dirname

|

||||

, resolve = path.resolve

|

||||

, join = path.join

|

||||

, mkdirp = require('mkdirp')

|

||||

, jade = require('../');

|

||||

|

||||

// jade options

|

||||

|

||||

var options = {};

|

||||

|

||||

// options

|

||||

|

||||

program

|

||||

.version(jade.version)

|

||||

.usage('[options] [dir|file ...]')

|

||||

.option('-o, --obj <str>', 'javascript options object')

|

||||

.option('-O, --out <dir>', 'output the compiled html to <dir>')

|

||||

.option('-p, --path <path>', 'filename used to resolve includes')

|

||||

.option('-P, --pretty', 'compile pretty html output')

|

||||

.option('-c, --client', 'compile for client-side runtime.js')

|

||||

.option('-D, --no-debug', 'compile without debugging (smaller functions)')

|

||||

|

||||

program.on('--help', function(){

|

||||

console.log(' Examples:');

|

||||

console.log('');

|

||||

console.log(' # translate jade the templates dir');

|

||||

console.log(' $ jade templates');

|

||||

console.log('');

|

||||

console.log(' # create {foo,bar}.html');

|

||||

console.log(' $ jade {foo,bar}.jade');

|

||||

console.log('');

|

||||

console.log(' # jade over stdio');

|

||||

console.log(' $ jade < my.jade > my.html');

|

||||

console.log('');

|

||||

console.log(' # jade over stdio');

|

||||

console.log(' $ echo "h1 Jade!" | jade');

|

||||

console.log('');

|

||||

console.log(' # foo, bar dirs rendering to /tmp');

|

||||

console.log(' $ jade foo bar --out /tmp ');

|

||||

console.log('');

|

||||

});

|

||||

|

||||

program.parse(process.argv);

|

||||

|

||||

// options given, parse them

|

||||

|

||||

if (program.obj) options = eval('(' + program.obj + ')');

|

||||

|

||||

// --filename

|

||||

|

||||

if (program.path) options.filename = program.path;

|

||||

|

||||

// --no-debug

|

||||

|

||||

options.compileDebug = program.debug;

|

||||

|

||||

// --client

|

||||

|

||||

options.client = program.client;

|

||||

|

||||

// --pretty

|

||||

|

||||

options.pretty = program.pretty;

|

||||

|

||||

// left-over args are file paths

|

||||

|

||||

var files = program.args;

|

||||

|

||||

// compile files

|

||||

|

||||

if (files.length) {

|

||||

console.log();

|

||||

files.forEach(renderFile);

|

||||

process.on('exit', console.log);

|

||||

// stdio

|

||||

} else {

|

||||

stdin();

|

||||

}

|

||||

|

||||

/**

|

||||

* Compile from stdin.

|

||||

*/

|

||||

|

||||

function stdin() {

|

||||

var buf = '';

|

||||

process.stdin.setEncoding('utf8');

|

||||

process.stdin.on('data', function(chunk){ buf += chunk; });

|

||||

process.stdin.on('end', function(){

|

||||

var fn = jade.compile(buf, options);

|

||||

var output = options.client

|

||||

? fn.toString()

|

||||

: fn(options);

|

||||

process.stdout.write(output);

|

||||

}).resume();

|

||||

}

|

||||

|

||||

/**

|

||||

* Process the given path, compiling the jade files found.

|

||||

* Always walk the subdirectories.

|

||||

*/

|

||||

|

||||

function renderFile(path) {

|

||||

var re = /\.jade$/;

|

||||

fs.lstat(path, function(err, stat) {

|

||||

if (err) throw err;

|

||||

// Found jade file

|

||||

if (stat.isFile() && re.test(path)) {

|

||||

fs.readFile(path, 'utf8', function(err, str){

|

||||

if (err) throw err;

|

||||

options.filename = path;

|

||||

var fn = jade.compile(str, options);

|

||||

var extname = options.client ? '.js' : '.html';

|

||||

path = path.replace(re, extname);

|

||||

if (program.out) path = join(program.out, basename(path));

|

||||

var dir = resolve(dirname(path));

|

||||

mkdirp(dir, 0755, function(err){

|

||||

if (err) throw err;

|

||||

var output = options.client

|

||||

? fn.toString()

|

||||

: fn(options);

|

||||

fs.writeFile(path, output, function(err){

|

||||

if (err) throw err;

|

||||

console.log(' \033[90mrendered \033[36m%s\033[0m', path);

|

||||

});

|

||||

});

|

||||

});

|

||||

// Found directory

|

||||

} else if (stat.isDirectory()) {

|

||||

fs.readdir(path, function(err, files) {

|

||||

if (err) throw err;

|

||||

files.map(function(filename) {

|

||||

return path + '/' + filename;

|

||||

}).forEach(renderFile);

|

||||

});

|

||||

}

|

||||

});

|

||||

}

|

||||

33

.vscode/extensions/saviorisdead.RustyCode-0.18.0/node_modules/.bin/mkdirp

generated

vendored

Executable file

|

|

@@ -0,0 +1,33 @@

|

|||

#!/usr/bin/env node

|

||||

|

||||

var mkdirp = require('../');

|

||||

var minimist = require('minimist');

|

||||

var fs = require('fs');

|

||||

|

||||

var argv = minimist(process.argv.slice(2), {

|

||||

alias: { m: 'mode', h: 'help' },

|

||||

string: [ 'mode' ]

|

||||

});

|

||||

if (argv.help) {

|

||||

fs.createReadStream(__dirname + '/usage.txt').pipe(process.stdout);

|

||||

return;

|

||||

}

|

||||

|

||||

var paths = argv._.slice();

|

||||

var mode = argv.mode ? parseInt(argv.mode, 8) : undefined;

|

||||

|

||||

(function next () {

|

||||

if (paths.length === 0) return;

|

||||

var p = paths.shift();

|

||||

|

||||

if (mode === undefined) mkdirp(p, cb)

|

||||

else mkdirp(p, mode, cb)

|

||||

|

||||

function cb (err) {

|

||||

if (err) {

|

||||

console.error(err.message);

|

||||

process.exit(1);

|

||||

}

|

||||

else next();

|

||||

}

|

||||

})();

|

||||

72

.vscode/extensions/saviorisdead.RustyCode-0.18.0/node_modules/.bin/mocha

generated

vendored

Executable file

|

|

@@ -0,0 +1,72 @@

|

|||

#!/usr/bin/env node

|

||||

|

||||

/**

|

||||

* This tiny wrapper file checks for known node flags and appends them

|

||||

* when found, before invoking the "real" _mocha(1) executable.

|

||||

*/

|

||||

|

||||

var spawn = require('child_process').spawn,

|

||||

path = require('path'),

|

||||

fs = require('fs'),

|

||||

getOptions = require('./options'),

|

||||

args = [path.join(__dirname, '_mocha')];

|

||||

|

||||

// Load mocha.opts into process.argv

|

||||

// Must be loaded here to handle node-specific options

|

||||

getOptions();

|

||||

|

||||

process.argv.slice(2).forEach(function(arg){

|

||||

var flag = arg.split('=')[0];

|

||||

|

||||

switch (flag) {

|

||||

case '-d':

|

||||

args.unshift('--debug');

|

||||

args.push('--no-timeouts');

|

||||

break;

|

||||

case 'debug':

|

||||

case '--debug':

|

||||

case '--debug-brk':

|

||||

args.unshift(arg);

|

||||

args.push('--no-timeouts');

|

||||

break;

|

||||

case '-gc':

|

||||

case '--expose-gc':

|

||||

args.unshift('--expose-gc');

|

||||

break;

|

||||

case '--gc-global':

|

||||

case '--es_staging':

|

||||

case '--no-deprecation':

|

||||

case '--prof':

|

||||

case '--log-timer-events':

|

||||

case '--throw-deprecation':

|

||||

case '--trace-deprecation':

|

||||

case '--use_strict':

|

||||

case '--allow-natives-syntax':

|

||||

case '--perf-basic-prof':

|

||||

args.unshift(arg);

|

||||

break;

|

||||

default:

|

||||

if (0 == arg.indexOf('--harmony')) args.unshift(arg);

|

||||

else if (0 == arg.indexOf('--trace')) args.unshift(arg);

|

||||

else if (0 == arg.indexOf('--max-old-space-size')) args.unshift(arg);

|

||||

else args.push(arg);

|

||||

break;

|

||||

}

|

||||

});

|

||||

|

||||

var proc = spawn(process.execPath, args, { stdio: 'inherit' });

|

||||

proc.on('exit', function (code, signal) {

|

||||

process.on('exit', function(){

|

||||

if (signal) {

|

||||

process.kill(process.pid, signal);

|

||||

} else {

|

||||

process.exit(code);

|

||||

}

|

||||

});

|

||||

});

|

||||

|

||||

// terminate children.

|

||||

process.on('SIGINT', function () {

|

||||

proc.kill('SIGINT'); // calls runner.abort()

|

||||

proc.kill('SIGTERM'); // if that didn't work, we're probably in an infinite loop, so make it die.

|

||||

});

|

||||

133

.vscode/extensions/saviorisdead.RustyCode-0.18.0/node_modules/.bin/semver

generated

vendored

Executable file

|

|

@@ -0,0 +1,133 @@

|

|||

#!/usr/bin/env node

|

||||

// Standalone semver comparison program.

|

||||

// Exits successfully and prints matching version(s) if

|

||||

// any supplied version is valid and passes all tests.

|

||||

|

||||

var argv = process.argv.slice(2)

|

||||

, versions = []

|

||||

, range = []

|

||||

, gt = []

|

||||

, lt = []

|

||||

, eq = []

|

||||

, inc = null

|

||||

, version = require("../package.json").version

|

||||

, loose = false

|

||||

, identifier = undefined

|

||||

, semver = require("../semver")

|

||||

, reverse = false

|

||||

|

||||

main()

|

||||

|

||||

function main () {

|

||||

if (!argv.length) return help()

|

||||

while (argv.length) {

|

||||

var a = argv.shift()

|

||||

var i = a.indexOf('=')

|

||||

if (i !== -1) {

|

||||

argv.unshift(a.slice(i + 1)) // queue the value after '=' before truncating a

|

||||

a = a.slice(0, i)

|

||||

}

|

||||

switch (a) {

|

||||

case "-rv": case "-rev": case "--rev": case "--reverse":

|

||||

reverse = true

|

||||

break

|

||||

case "-l": case "--loose":

|

||||

loose = true

|

||||

break

|

||||

case "-v": case "--version":

|

||||

versions.push(argv.shift())

|

||||

break

|

||||

case "-i": case "--inc": case "--increment":

|

||||

switch (argv[0]) {

|

||||

case "major": case "minor": case "patch": case "prerelease":

|

||||

case "premajor": case "preminor": case "prepatch":

|

||||

inc = argv.shift()

|

||||

break

|

||||

default:

|

||||

inc = "patch"

|

||||

break

|

||||

}

|

||||

break

|

||||

case "--preid":

|

||||

identifier = argv.shift()

|

||||

break

|

||||

case "-r": case "--range":

|

||||

range.push(argv.shift())

|

||||

break

|

||||

case "-h": case "--help": case "-?":

|

||||

return help()

|

||||

default:

|

||||

versions.push(a)

|

||||

break

|

||||

}

|